TIPS: Troubleshooting a Kubernetes HPA That Stopped Working

Symptoms

```
$ kubectl get hpa -A
NAME               REFERENCE                     TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
xxxxxx-apiserver   Deployment/xxxxxx-apiserver   <unknown>/10%   1         6         6          6h36m
```
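When a cluster has many HPAs, it is quicker to list every one whose target is `<unknown>` than to scan the table by eye. A minimal Python sketch, assuming the plain-text, whitespace-separated table layout shown above (header row first, `NAMESPACE` column optional); the function name `hpas_with_unknown_targets` is hypothetical:

```python
def hpas_with_unknown_targets(output):
    """Return the NAME of every HPA row that contains <unknown>.

    Assumes `kubectl get hpa` plain-text output: a header row followed by
    whitespace-separated rows. The NAME position is read from the header,
    so it works with or without the NAMESPACE column that `-A` adds.
    The whole row is searched; in practice only TARGETS can hold "<unknown>".
    """
    lines = [line for line in output.splitlines() if line.strip()]
    if not lines:
        return []
    name_idx = lines[0].split().index("NAME")
    return [
        row.split()[name_idx]
        for row in lines[1:]
        if "<unknown>" in row.lower()
    ]
```

Fed the header and data row above (without the `$ kubectl` line), it returns `['xxxxxx-apiserver']`.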

```
$ kubectl top node
Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)

$ kubectl top pod
Error from server (ServiceUnavailable): the server is currently unable to handle the request (get pods.metrics.k8s.io)
```

Investigation

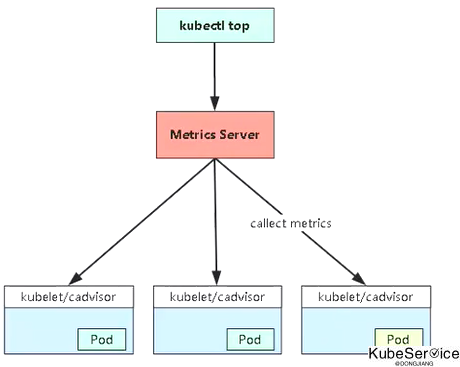

Check the metrics-server logs:

```
$ kubectl logs metrics-server-5543ab8f -n kube-system
E1115 01:21:33.287357 1 manager.go:111] unable to fully collect metrics: [unable to fully scrape metrics from source kubelet_summary:k8s-node03: unable to fetch metrics from Kubelet k8s-node03 (172.16.135.13): Get http://172.16.135.13:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.13:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node01: unable to fetch metrics from Kubelet k8s-node01 (172.16.135.11): Get http://172.16.135.11:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.11:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-master01: unable to fetch metrics from Kubelet k8s-master01 (172.16.135.10): Get http://172.16.135.10:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.10:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node02: unable to fetch metrics from Kubelet k8s-node02 (172.16.135.12): Get http://172.16.135.12:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.12:10255: connect: connection refused]
E1115 01:22:03.279839 1 manager.go:111] unable to fully collect metrics: [unable to fully scrape metrics from source kubelet_summary:k8s-master01: unable to fetch metrics from Kubelet k8s-master01 (172.16.135.10): Get http://172.16.135.10:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.10:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node01: unable to fetch metrics from Kubelet k8s-node01 (172.16.135.11): Get http://172.16.135.11:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.11:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node02: unable to fetch metrics from Kubelet k8s-node02 (172.16.135.12): Get http://172.16.135.12:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.12:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node03: unable to fetch metrics from Kubelet k8s-node03 (172.16.135.13): Get http://172.16.135.13:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.13:10255: connect: connection refused]
E1115 01:22:33.276133 1 manager.go:111] unable to fully collect metrics: [unable to fully scrape metrics from source kubelet_summary:k8s-node03: unable to fetch metrics from Kubelet k8s-node03 (172.16.135.13): Get http://172.16.135.13:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.13:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node01: unable to fetch metrics from Kubelet k8s-node01 (172.16.135.11): Get http://172.16.135.11:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.11:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node02: unable to fetch metrics from Kubelet k8s-node02 (172.16.135.12): Get http://172.16.135.12:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.12:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-master01: unable to fetch metrics from Kubelet k8s-master01 (172.16.135.10): Get http://172.16.135.10:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.10:10255: connect: connection refused]
E1115 01:23:03.277724 1 manager.go:111] unable to fully collect metrics: [unable to fully scrape metrics from source kubelet_summary:k8s-node02: unable to fetch metrics from Kubelet k8s-node02 (172.16.135.12): Get http://172.16.135.12:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.12:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node03: unable to fetch metrics from Kubelet k8s-node03 (172.16.135.13): Get http://172.16.135.13:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.13:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-node01: unable to fetch metrics from Kubelet k8s-node01 (172.16.135.11): Get http://172.16.135.11:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.11:10255: connect: connection refused, unable to fully scrape metrics from source kubelet_summary:k8s-master01: unable to fetch metrics from Kubelet k8s-master01 (172.16.135.10): Get http://172.16.135.10:10255/stats/summary?only_cpu_and_memory=true: dial tcp 172.16.135.10:10255: connect: connection refused]
```
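The log repeats the same failure every 30 seconds for every node, which is easier to read once it is reduced to one entry per kubelet endpoint. A minimal Python sketch, assuming the message format shown above (`unable to fetch metrics from Kubelet <node> (<ip>): Get <url>: <error>`); other metrics-server versions word this differently, and `summarize_scrape_failures` and `report` are hypothetical names:

```python
import re
from collections import defaultdict

# One per-node failure inside a metrics-server "unable to fully collect metrics"
# entry, in the format shown above. The error text is assumed to end at the
# next "," or "]".
FAILURE = re.compile(
    r"unable to fetch metrics from Kubelet (?P<node>\S+) \((?P<ip>[\d.]+)\): "
    r"Get (?P<scheme>https?)://[\d.]+:(?P<port>\d+)/\S*: (?P<error>[^,\]]+)"
)


def summarize_scrape_failures(log_text):
    """Map (node, ip, scheme, port) to the set of distinct errors seen for it."""
    summary = defaultdict(set)
    for m in FAILURE.finditer(log_text):
        key = (m["node"], m["ip"], m["scheme"], int(m["port"]))
        summary[key].add(m["error"].strip())
    return dict(summary)


def report(summary):
    """Print one line per kubelet endpoint, sorted by node name."""
    for (node, ip, scheme, port), errors in sorted(summary.items()):
        print(f"{node} {scheme}://{ip}:{port} -> {'; '.join(sorted(errors))}")
```

Run over the four entries above, it yields four keys (`k8s-master01`, `k8s-node01`, `k8s-node02`, `k8s-node03`), all `http` on port 10255 with `connection refused`. Port 10255 is the kubelet's read-only HTTP port, so a refusal on every node suggests nothing is listening on that port (or something rejects the connection) on these nodes.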

Next, check the APIService that registers metrics.k8s.io with the aggregation layer:

```
$ kubectl describe apiservice v1beta1.metrics.k8s.io
Name:         v1beta1.metrics.k8s.io
Namespace:
Labels:       k8s-app=metrics-server
Annotations:
API Version:  apiregistration.k8s.io/v1
Kind:         APIService
Metadata:
  Creation Timestamp:  2022-11-15T07:43:21Z
  Resource Version:    88519224
  Self Link:           /apis/apiregistration.k8s.io/v1/apiservices/v1beta1.metrics.k8s.io
  UID:                 4fd0cbfe-b479-4810-95b8-5bf1161c24d3
Spec:
  Group:                     metrics.k8s.io
  Group Priority Minimum:    100
  Insecure Skip TLS Verify:  true
  Service:
    Name:            metrics-server
    Namespace:       kube-system
    Port:            443
  Version:           v1beta1
  Version Priority:  100
Status:
  Conditions:
    Last Transition Time:  2022-11-15T07:43:21Z
    Message:               failing or missing response from https://172.19.251.11:10250/apis/metrics.k8s.io/v1beta1: Get https://172.19.251.11:10250/apis/metrics.k8s.io/v1beta1: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
    Reason:                FailedDiscoveryCheck
    Status:                False
    Type:                  Available
Events:                    <none>
```

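This shows that the aggregation layer cannot reach the metrics endpoint: the discovery request for the APIService registered through apiregistration.k8s.io/v1 goes to https://172.19.251.11:10250 and times out, so v1beta1.metrics.k8s.io is marked Available=False with reason FailedDiscoveryCheck.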
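The same check can be run over every registered APIService at once, which also catches other aggregated APIs failing for the same reason. A minimal Python sketch that takes the JSON printed by `kubectl get apiservice -o json` (an `items` list whose entries carry `status.conditions` with `type`, `status`, `reason` and `message`); the function name and the `NoAvailableCondition` placeholder are hypothetical:

```python
import json


def unavailable_apiservices(apiservice_list_json):
    """Return (name, reason, message) for each APIService not reporting Available=True."""
    data = json.loads(apiservice_list_json)
    problems = []
    for item in data.get("items", []):
        name = item["metadata"]["name"]
        conditions = item.get("status", {}).get("conditions", [])
        available = next((c for c in conditions if c.get("type") == "Available"), None)
        if available is None:
            # Hypothetical placeholder reason: no Available condition reported at all.
            problems.append((name, "NoAvailableCondition", ""))
        elif available.get("status") != "True":
            problems.append((name, available.get("reason", ""), available.get("message", "")))
    return problems
```

For example, save `kubectl get apiservice -o json` to a file and pass its contents to the function; in this cluster it would be expected to report `v1beta1.metrics.k8s.io` with `FailedDiscoveryCheck`.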

Root cause

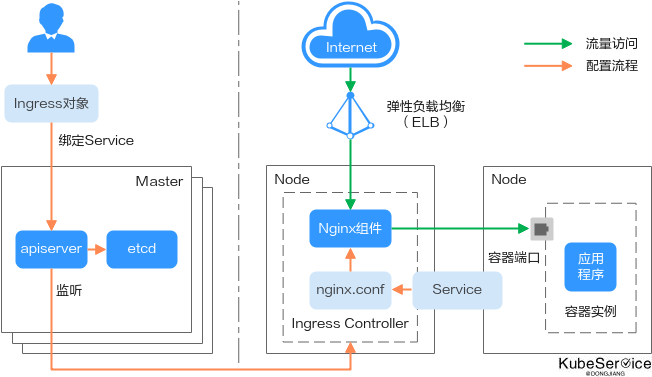

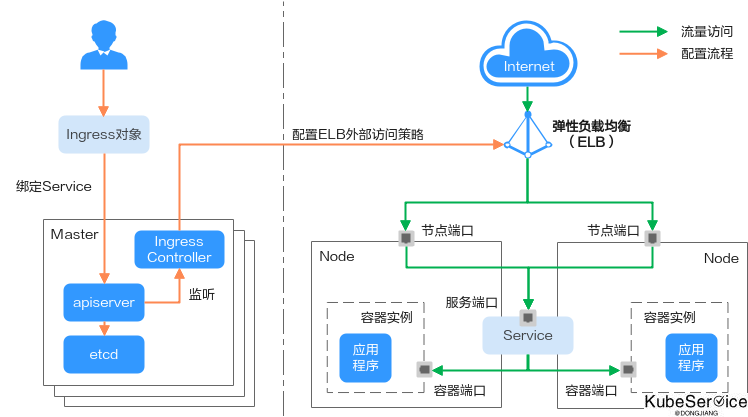

Because this is a managed (hosted) Kubernetes cluster, the ingress-controller shown above only reverse-proxies the apiserver API.

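The actual problem: when the apiserver calls addon pods running on the nodes, it can only go through a small, fixed set of endpoints. A backend outside that set, such as the metrics-server pod at 172.19.251.11:10250 above, cannot be reached from the control plane.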

As a result, in this kind of cluster, calls from the apiserver to custom webhooks and custom controllers do not work.
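The root cause reduces to a reachability rule: an extension the apiserver must call (an aggregated APIService, an admission webhook) only works if its backend endpoint is one the control plane can reach. A minimal Python sketch of that rule; the `Backend` type, the function name and the reachable endpoint set (a documentation-range address) are all hypothetical, and only the metrics-server endpoint `172.19.251.11:10250` comes from the output above:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    """An extension the apiserver calls, and the endpoint it resolves to (hypothetical model)."""
    kind: str  # illustrative label, e.g. "APIService" or "ValidatingWebhook"
    name: str
    ip: str
    port: int


def broken_extensions(backends, reachable):
    """Return "kind/name" for every backend whose (ip, port) is not in the reachable set."""
    return [f"{b.kind}/{b.name}" for b in backends if (b.ip, b.port) not in reachable]


# Hypothetical: the managed control plane can only reach this one fixed endpoint.
REACHABLE = {("192.0.2.10", 443)}

backends = [
    Backend("APIService", "v1beta1.metrics.k8s.io", "172.19.251.11", 10250),
]
print(broken_extensions(backends, REACHABLE))  # ['APIService/v1beta1.metrics.k8s.io']
```

The model is deliberately crude: it treats reachability as membership in a fixed endpoint list, which matches the situation described above but ignores routing, firewalls and TLS.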

Industry example: the Huawei Cloud CCI architecture

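Following the approach described above, an ingress-controller reverse-proxies the apiserver API, while an ELB in front of 80/443 NodePorts reverse-proxies the Services in the cluster.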

Combining a reverse proxy with a forward proxy lets requests cross between the control plane and the data plane in both directions.
