Kubernetes APIServer Memory Exhaustion

Symptoms

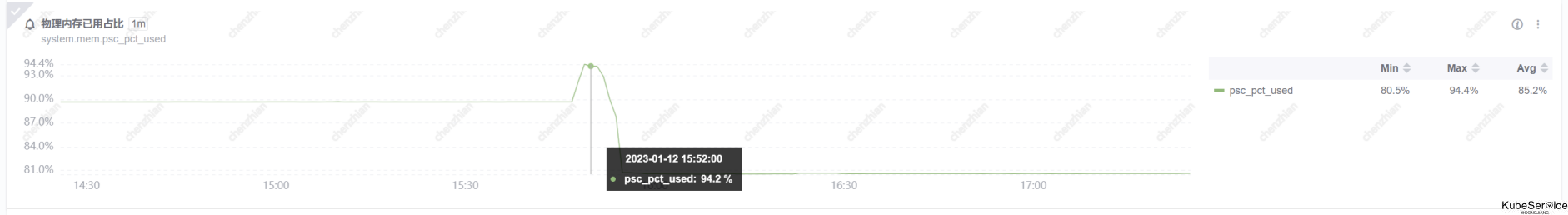

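Our production Kubernetes cluster has 5 machines. Memory usage was above 80% on 4 of them and 3.59% on the fifth. On one master node running apiserver, memory climbed to 98%. The apiserver was then OOM-killed and restarted.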

Analysis

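The apiserver's memory kept rising, which means something was allocating heap memory and never releasing it. The first suspect was too many connections, so we counted connections to the apiserver port: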

$ netstat -nat | grep -i "6443" | wc -l

1345

There were about 1,300 connections. That is not many for a machine with 128 cores and 745 GB of memory.

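Since the connection count did not explain the growth, the next step was to dump a heap profile of kube-apiserver and inspect its memory breakdown.

This requires Go's pprof tool. The following covers how to use go tool pprof and how to configure kube-apiserver for it.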

Using go tool pprof

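Kubernetes 1.18 enables profiling by default. Our production cluster runs 1.16, so it has to be turned on manually by adding --profiling=true to the kube-apiserver manifest: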

$ cat ./manifests/kube-apiserver.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  ...
    - --profiling=true

Use port forwarding to forward the Pod's port to a local port:

$ kubectl -n kube-system port-forward kube-apiserver-8pmpz 6443:6443

$ go tool pprof http://127.0.0.1:6443/debug/pprof/heap

Fetching profile over HTTP from http://127.0.0.1:6443/debug/pprof/heap

Saved profile in /root/pprof/pprof.kube-apiserver.alloc_objects.alloc_space.inuse_objects.inuse_space.004.pb.gz

File: kube-apiserver

Type: inuse_space

Time: Dec 10, 2020 at 2:46pm (CST)

Entering interactive mode (type "help" for commands, "o" for options)

(pprof)top 20

Showing nodes accounting for 11123.22MB, 95.76% of 11616.18MB total

Dropped 890 nodes (cum <= 58.08MB)

Showing top 20 nodes out of 113

flat flat% sum% cum cum%

9226.15MB 79.43% 79.43% 9226.15MB 79.43% bytes.makeSlice

1122.61MB 9.66% 89.09% 1243.65MB 10.71% k8s.io/kubernetes/vendor/k8s.io/api/core/v1.(*ConfigMap).Unmarshal

139.55MB 1.20% 90.29% 153.05MB 1.32% k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/apis/meta/v1.(*ObjectMeta).Unmarshal

120.53MB 1.04% 91.33% 165.03MB 1.42% encoding/json.(*decodeState).objectInterface

117.29MB 1.01% 92.34% 117.29MB 1.01% reflect.unsafe_NewArray

108.03MB 0.93% 93.27% 108.03MB 0.93% reflect.mapassign

66.51MB 0.57% 93.84% 66.51MB 0.57% k8s.io/kubernetes/vendor/github.com/json-iterator/go.(*Iterator).ReadString

62.51MB 0.54% 94.38% 62.51MB 0.54% k8s.io/kubernetes/vendor/k8s.io/apiserver/pkg/registry/generic.ObjectMetaFieldsSet

61.51MB 0.53% 94.91% 61.51MB 0.53% k8s.io/kubernetes/vendor/k8s.io/apiextensions-apiserver/pkg/registry/customresource.objectMetaFieldsSet

43.01MB 0.37% 95.28% 137.03MB 1.18% k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/runtime.structToUnstructured

18MB 0.15% 95.43% 183.61MB 1.58% k8s.io/kubernetes/vendor/k8s.io/apiserver/pkg/storage/cacher.(*watchCache).Replace

13.50MB 0.12% 95.55% 137.03MB 1.18% k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/runtime.toUnstructured

9MB 0.077% 95.63% 237.54MB 2.04% k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/apis/meta/v1/unstructured.unstructuredJSONScheme.decodeToUnstructured

7.50MB 0.065% 95.69% 144.53MB 1.24% k8s.io/kubernetes/vendor/k8s.io/apimachinery/pkg/runtime.(*unstructuredConverter).ToUnstructured

6MB 0.052% 95.74% 123.29MB 1.06% reflect.MakeSlice

1.50MB 0.013% 95.76% 8944.02MB 77.00% encoding/json.mapEncoder.encode
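The columns in the top output above can be checked by hand:

- flat% is a node's own allocation divided by the 11616.18MB total.
- sum% accumulates flat% down the list.
- The drop threshold "cum <= 58.08MB" is 0.5% of the total, which matches pprof's default -nodefraction of 0.005.

This Go sketch recomputes those values from the displayed MB figures. pprof works from exact byte counts, so recomputed percentages can differ from the printed ones in the last digit.

```go
package main

import (
	"fmt"
	"math"
)

// totalMB is the profile total from "of 11616.18MB total" in the output above.
const totalMB = 11616.18

// pct returns sizeMB as a percentage of the profile total.
func pct(sizeMB float64) float64 { return 100 * sizeMB / totalMB }

// round2 rounds to two decimals, matching pprof's display precision.
func round2(x float64) float64 { return math.Round(x*100) / 100 }

func main() {
	// Flat sizes of the first two rows of `top 20`, in display order:
	// bytes.makeSlice, (*ConfigMap).Unmarshal.
	flats := []float64{9226.15, 1122.61}
	sum := 0.0
	for _, f := range flats {
		sum += pct(f)
		fmt.Printf("flat=%.2fMB flat%%=%.2f sum%%=%.2f\n", f, round2(pct(f)), round2(sum))
	}
	// cum% of encoding/json.mapEncoder.encode (8944.02MB cumulative).
	fmt.Printf("mapEncoder.encode cum%%=%.2f\n", round2(pct(8944.02)))
	// pprof hides nodes below nodefraction*total; the default nodefraction is 0.005.
	fmt.Printf("drop threshold=%.2fMB\n", round2(0.005*totalMB))
}
```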

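At this point the picture is fairly clear. bytes.makeSlice accounts for 79.43% of the in-use heap, most of it reached through encoding/json.mapEncoder.encode (77.00% cumulative). (*ConfigMap).Unmarshal holds another 9.66%. A sudden burst of ConfigMap operations (about 347 KB per ConfigMap on average) meant the APIServer could not list or update the ConfigMaps in a single allocation. It kept creating new slices until it ran out of memory.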

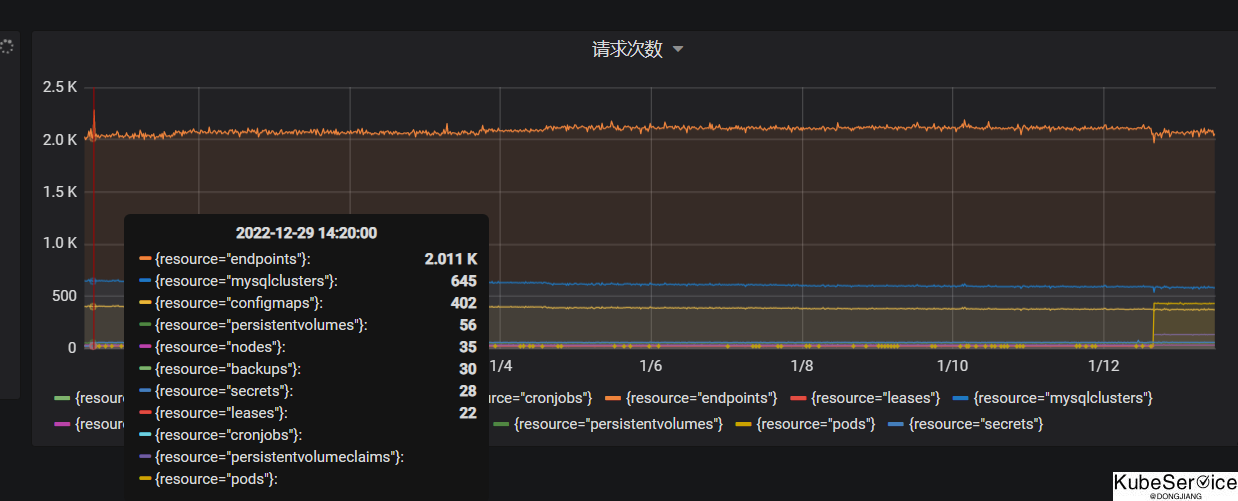

Request pattern

ConfigMap and Pod request volume spiked suddenly, exhausting host memory.


Solutions

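- Monitor memory on every machine with a safe watermark of 60%, and scale out the apiserver quickly when it is crossed.

- Upgrade Kubernetes to 1.18 or later and enable --goaway-chance=0.001 on kube-apiserver. The apiserver then sends an HTTP/2 GOAWAY on roughly 1 in 1,000 requests. Long-lived clients reconnect, so load spreads across apiserver instances instead of piling onto a few of them.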
