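1. Symptoms

A production k8s cluster raised an alert: when deploying pods that use PV/PVC, a pod took more than 4 minutes to finish starting. That time includes creating the PVC, creating the PV, and the PVC changing to the Bound state.

2. Troubleshooting

Checking the cluster size: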

apps@WXJD-PSC-xxxx-xxx ~$ kubectl get pv | wc -l

2688

apps@WXJD-PSC-xxxx-xxx ~$ kubectl get PVC -A | wc -l

1171

apps@WXJD-PSC-xxxx-xxx ~$ kubectl get node | wc -l

217

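The cluster has 216 nodes, 2687 PVs and 1170 PVCs (each count above includes one header line).

2.1 Hypothesis: errors or retries returned by the underlying CSI driver

The underlying storage uses ceph-csi volumes in RBD mode, so the first suspicion was that its responses were delayed.

However, the logs of the csi plugin and the csi provisioner show no obvious errors of that kind: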

I0704 08:28:57.895407       1 controller.go:1471] delete "pvc-9f24361e-9d18-4025-96a4-b6c5c07b85b1": started

I0704 08:28:57.951941       1 connection.go:183] GRPC call: /csi.v1.Controller/DeleteVolume

I0704 08:28:57.951953       1 connection.go:184] GRPC request: {"secrets":"***stripped***","volume_id":"0001-0024-75e3f33f-c0cb-4053-a8eb-1cc9a5676f87-0000000000000003-8a8dba6e-1813-11ee-b3bf-eaf9d27ef1c9"}

I0704 08:28:57.981481       1 connection.go:186] GRPC response: {}

I0704 08:28:57.981516       1 connection.go:187] GRPC error: rpc error: code = Internal desc = rbd csi-vol-8a8dba6e-1813-11ee-b3bf-eaf9d27ef1c9 is still being used

E0704 08:28:57.981541       1 controller.go:1481] delete "pvc-9f24361e-9d18-4025-96a4-b6c5c07b85b1": volume deletion failed: rpc error: code = Internal desc = rbd csi-vol-8a8dba6e-1813-11ee-b3bf-eaf9d27ef1c9 is still being used

W0704 08:28:57.981569       1 controller.go:989] Retrying syncing volume "pvc-9f24361e-9d18-4025-96a4-b6c5c07b85b1", failure 116

E0704 08:28:57.981590       1 controller.go:1007] error syncing volume "pvc-9f24361e-9d18-4025-96a4-b6c5c07b85b1": rpc error: code = Internal desc = rbd csi-vol-8a8dba6e-1813-11ee-b3bf-eaf9d27ef1c9 is still being used

I0704 08:28:57.981664       1 event.go:285] Event(v1.ObjectReference{Kind:"PersistentVolume", Namespace:"", Name:"pvc-9f24361e-9d18-4025-96a4-b6c5c07b85b1", UID:"4c72c3b5-e646-4939-b742-569723501a19", APIVersion:"v1", ResourceVersion:"4454875318", FieldPath:""}): type: 'Warning' reason: 'VolumeFailedDelete' rpc error: code = Internal desc = rbd csi-vol-8a8dba6e-1813-11ee-b3bf-eaf9d27ef1c9 is still being used

I0704 08:28:58.367237       1 leaderelection.go:278] successfully renewed lease kube-system/rbd-csi-ceph-com

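These logs show no binding errors from the AD Controller. The only failure is a single volume that cannot be deleted, which is a separate problem.

2.2 Hypothesis: the PV controller in Controller Manager waits in a queue while binding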

2023-07-06T14:34:59.939392489+08:00 stderr F I0706 14:34:59.939362 1 pv_controller_base.go:640] storeObjectUpdate updating volume "pvc-e61e0904-a224-4507-9e42-8c15a0d72884" with version 680511035

2023-07-06T14:34:59.939397062+08:00 stderr F I0706 14:34:59.939371 1 pv_controller.go:543] synchronizing PersistentVolume[pvc-e61e0904-a224-4507-9e42-8c15a0d72884]: phase: Released, bound to: "80cd9e90/data-ae8a1da801b-1702e00-0 (uid: e61e0904-a224-4507-9e42-8c15a0d72884)", boundByController: false

2023-07-06T14:34:59.939406204+08:00 stderr F I0706 14:34:59.939380 1 pv_controller.go:578] synchronizing PersistentVolume[pvc-e61e0904-a224-4507-9e42-8c15a0d72884]: volume is bound to claim 80cd9e90/data-ae8a1da801b-1702e00-0

2023-07-06T14:34:59.939408161+08:00 stderr F I0706 14:34:59.939317 1 pv_controller.go:520] synchronizing bound PersistentVolumeClaim[67828ece/data-a72c7567ce3-8477300-0]: claim is already correctly bound

2023-07-06T14:34:59.939414644+08:00 stderr F I0706 14:34:59.939388 1 pv_controller.go:1012] binding volume "data-a72c7567ce3-8477300-0-wxjd-psc-p15f1-spod11-pm-os01-mysql-lk8s-06-5d574457" to claim "67828ece/data-a72c7567ce3-8477300-0"

2023-07-06T14:34:59.939417139+08:00 stderr F I0706 14:34:59.939390 1 pv_controller.go:612] synchronizing PersistentVolume[pvc-e61e0904-a224-4507-9e42-8c15a0d72884]: claim 80cd9e90/data-ae8a1da801b-1702e00-0 not found

2023-07-06T14:34:59.939419053+08:00 stderr F I0706 14:34:59.939394 1 pv_controller.go:910] updating PersistentVolume[data-a72c7567ce3-8477300-0-wxjd-psc-p15f1-spod11-pm-os01-mysql-lk8s-06-5d574457]: binding to "67828ece/data-a72c7567ce3-8477300-0"

2023-07-06T14:34:59.939420961+08:00 stderr F I0706 14:34:59.939395 1 pv_controller.go:1108] reclaimVolume[pvc-e61e0904-a224-4507-9e42-8c15a0d72884]: policy is Delete

2023-07-06T14:34:59.939430994+08:00 stderr F I0706 14:34:59.939402 1 pv_controller.go:1753] scheduleOperation[delete-pvc-e61e0904-a224-4507-9e42-8c15a0d72884[67d5e9c4-57e3-4343-8747-6b7ae83bac5e]]

2023-07-06T14:34:59.93943311+08:00 stderr F I0706 14:34:59.939404 1 pv_controller.go:922] updating PersistentVolume[data-a72c7567ce3-8477300-0-wxjd-psc-p15f1-spod11-pm-os01-mysql-lk8s-06-5d574457]: already bound to "67828ece/data-a72c7567ce3-8477300-0"

2023-07-06T14:34:59.939434888+08:00 stderr F I0706 14:34:59.939406 1 pv_controller.go:1764] operation "delete-pvc-e61e0904-a224-4507-9e42-8c15a0d72884[67d5e9c4-57e3-4343-8747-6b7ae83bac5e]" is already running, skipping

2023-07-06T14:35:01.09077583+08:00 stderr F I0706 14:35:01.087787 1 request.go:600] Waited for 1m1.146385902s due to client-side throttling, not priority and fairness, request: GET:https://[2409:8c85:2021:3000::ae2:cd08]:6443/api/v1/persistentvolumes/pvc-e61e0904-a224-4507-9e42-8c15a0d72884

2023-07-06T14:35:01.121686014+08:00 stderr F I0706 14:35:01.121578 1 request.go:600] Waited for 291.938256ms due to client-side throttling, not priority and fairness, request: PUT:https://[2409:8c85:2021:3000::ae2:cd08]:6443/apis/batch/v1/namespaces/90552e00/cronjobs/a239855acd7-238ff-4e041-clean-h45bc/status

2023-07-06T14:35:01.130820524+08:00 stderr F I0706 14:35:01.130752 1 pv_controller.go:1244] Volume "pvc-e61e0904-a224-4507-9e42-8c15a0d72884" is already being deleted

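Tracing the volume whose ID ends in 8c15a0d72884 shows that the requests controller-manager sends through client-go were throttled by the client-side burst limit: the GET for this PV waited 1m1.146385902s.

The delay therefore comes mostly from the burst rate limit: requests are held back by the limiter and processed late.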
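To make the throttling concrete, here is a simplified token-bucket model, written only for illustration. It is not client-go's actual limiter, and `bucket`, `reserve` and `burstWait` are hypothetical names. The values 20 and 30 are the kube-controller-manager defaults for `--kube-api-qps` and `--kube-api-burst`; the backlog of 1250 requests is a made-up number chosen to show how a wait of about one minute can arise.

```go
package main

import (
	"fmt"
	"time"
)

// bucket is a simplified token bucket (hypothetical, for illustration only).
// It holds at most burst tokens and refills at qps tokens per second.
type bucket struct {
	qps    float64
	burst  float64
	tokens float64   // may go negative: the debt owed by queued requests
	last   time.Time // time of the last refill
}

func newBucket(qps, burst float64, now time.Time) *bucket {
	return &bucket{qps: qps, burst: burst, tokens: burst, last: now}
}

// reserve takes one token at time now and returns how long the caller
// has to wait before its request may be sent.
func (b *bucket) reserve(now time.Time) time.Duration {
	b.tokens += now.Sub(b.last).Seconds() * b.qps
	if b.tokens > b.burst {
		b.tokens = b.burst
	}
	b.last = now
	b.tokens--
	if b.tokens >= 0 {
		return 0
	}
	return time.Duration(-b.tokens / b.qps * float64(time.Second))
}

// burstWait returns the wait imposed on the last of n requests that all
// arrive at the same instant.
func burstWait(qps, burst float64, n int) time.Duration {
	now := time.Now()
	b := newBucket(qps, burst, now)
	var wait time.Duration
	for i := 0; i < n; i++ {
		wait = b.reserve(now)
	}
	return wait
}

func main() {
	// 20/30 are the controller-manager defaults; 1250 is a made-up backlog.
	fmt.Println("last request waits:", burstWait(20, 30, 1250))
}
```

In this model the first 30 requests pass immediately and every later one waits 1/20 s longer than the one before it, so a backlog of roughly a thousand requests is enough to push a single call past one minute.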

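3. Root cause

The throttled requests above include a CronJob status update, which led to the custom CronJobs that periodically query PVs/PVCs. The business runs a large number of CronJobs that delete unbound PVs and PVs pending deletion in the cluster.

The main cause is the way these CronJobs are used.

The normal, ideal case: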


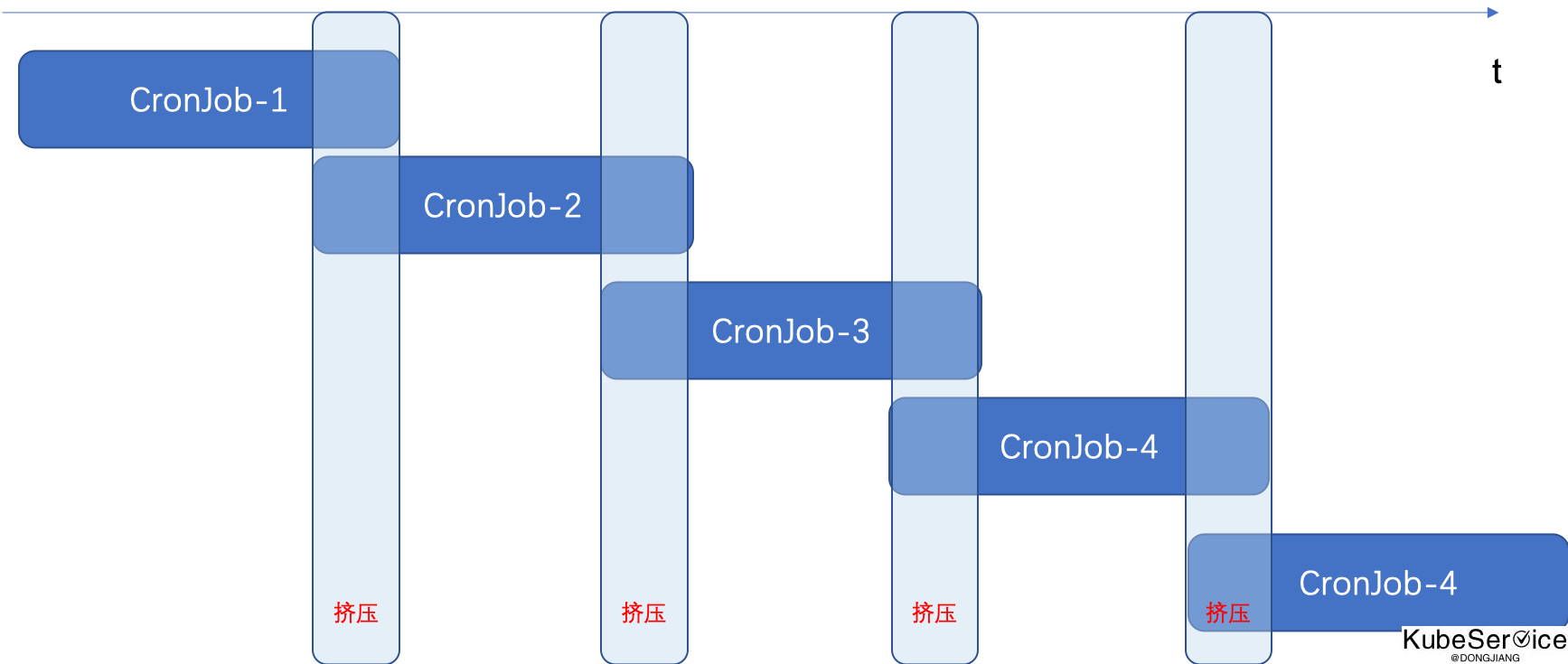

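As the number of PVs and PVCs in the cluster grows, each CronJob run takes longer to finish and starts to overlap with the next one, which increases the number of burst requests.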

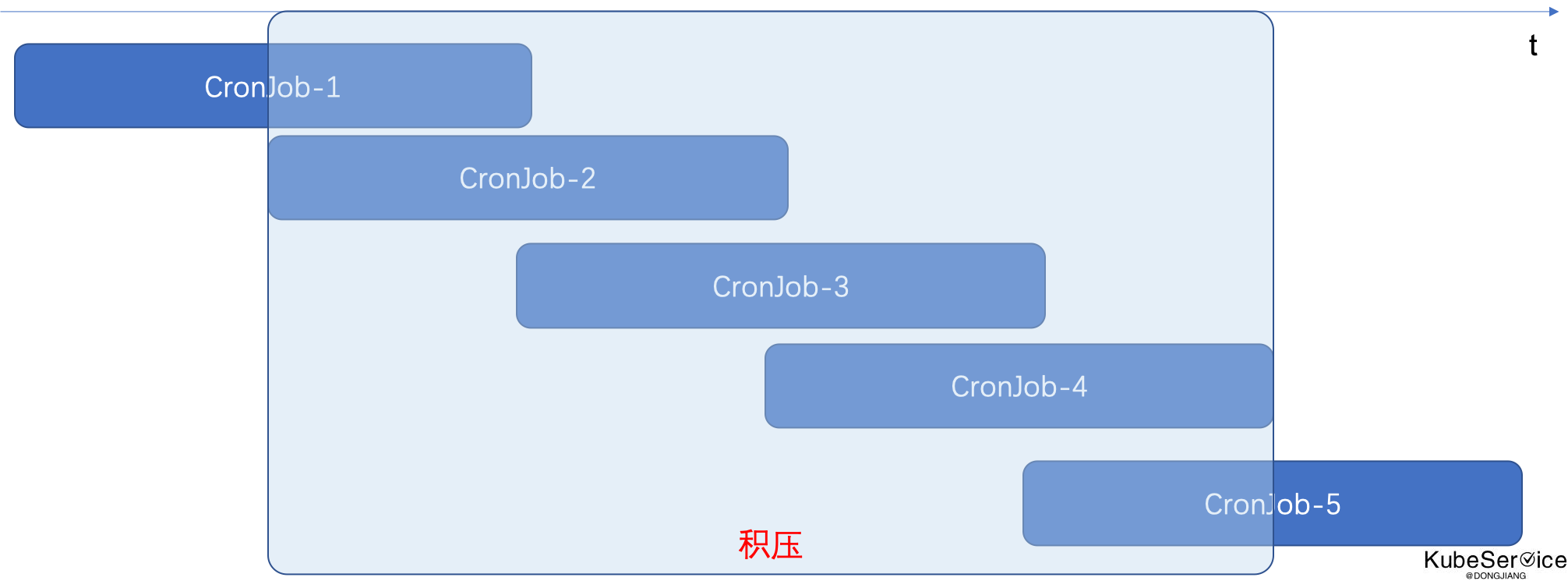

Worse still, several CronJob runs overlap and a large backlog builds up.

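The overlap can be estimated with simple arithmetic. The sketch below assumes that runs are allowed to overlap (the default `concurrencyPolicy: Allow` of a Kubernetes CronJob) and that a new run starts every period. `overlappingRuns` is a hypothetical helper, and the schedule and run times are made-up values, not measurements from this cluster.

```go
package main

import (
	"fmt"
	"time"
)

// overlappingRuns estimates the peak number of runs of a periodic job that
// are active at the same time, given that a new run starts every period and
// each run lasts runTime. Hypothetical helper, for illustration only.
func overlappingRuns(period, runTime time.Duration) int {
	if period <= 0 || runTime <= 0 {
		return 0
	}
	// Ceiling division: a run lasting 2.5 periods means up to 3 active runs.
	return int((runTime + period - 1) / period)
}

func main() {
	period := time.Minute // assumed schedule: one run per minute
	for _, runTime := range []time.Duration{
		30 * time.Second, // finishes before the next run starts
		90 * time.Second, // overlaps with the next run
		5 * time.Minute,  // several runs pile up
	} {
		fmt.Printf("run time %v -> up to %d concurrent run(s)\n",
			runTime, overlappingRuns(period, runTime))
	}
}
```

Each extra concurrent run adds its own stream of API requests, so the request rate grows with the overlap while the limiter's budget stays fixed.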

4. Solution

Short term (restoring production)

The controller-manager defaults

--concurrent-cron-job-syncs=5 # this flag requires k8s version >= 1.27.x

--kube-api-qps=20

--kube-api-burst=30

were changed to

--concurrent-cron-job-syncs=50 # this flag requires k8s version >= 1.27.x

--kube-api-qps=100

--kube-api-burst=500

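Long term

The custom CronJob needs to be converted into a long-running service.

A loop ensures that one check pass completes before the next one starts, so that overlapping CronJob runs can no longer pile up requests.

For example: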

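import (
	"context"
	"sync"
)

// CheckAll runs check passes one at a time until ctx is cancelled.
// The ctx parameter is an addition so that the loop can terminate.
func CheckAll(ctx context.Context) {
	var wg sync.WaitGroup
	// Capacity 1: at most one check pass is in flight at any time.
	concurrent := make(chan bool, 1)
	for ctx.Err() == nil {
		concurrent <- true // blocks until the previous pass releases its slot
		wg.Add(1)
		go func() {
			defer wg.Done()
			defer func() { <-concurrent }() // release the slot when the pass ends
			// do something
		}()
	}
	wg.Wait() // wait for the last pass before returning
}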
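A slightly fuller sketch of the same idea, runnable on its own: a long-running loop that starts the next check pass only after the previous one has returned, then pauses for a fixed interval. `runChecks`, `demo`, the `check` callback and all durations are hypothetical; the real PV/PVC cleanup logic is not shown here and is replaced by a sleep.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// runChecks calls check repeatedly until ctx is cancelled and returns the
// number of completed passes. Passes never overlap: the pause only begins
// after check has returned, however long it took.
func runChecks(ctx context.Context, pause time.Duration, check func(context.Context)) int {
	passes := 0
	for {
		check(ctx)
		passes++
		select {
		case <-ctx.Done():
			return passes
		case <-time.After(pause):
		}
	}
}

// demo runs the loop for a short time with a slow placeholder check.
func demo() int {
	ctx, cancel := context.WithTimeout(context.Background(), 350*time.Millisecond)
	defer cancel()

	return runChecks(ctx, 50*time.Millisecond, func(context.Context) {
		// Placeholder for one full PV/PVC check pass. A real check
		// should also watch ctx so that it can stop early.
		time.Sleep(100 * time.Millisecond)
	})
}

func main() {
	fmt.Println("completed passes:", demo())
}
```

Unlike a CronJob schedule, a slow pass here only delays the next one; it can never run alongside it, so the request rate stays bounded by a single pass.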
